Data -> Information -> Knowledge -> Wisdom

Data is a collection of raw facts from which conclusions may be drawn.

Data can be classified as structured or unstructured.

The majority of the data being created (80% to 90%) is unstructured, and this share is expected to increase further.

Structured data is typically stored using a database management system (DBMS).

Data Center: The core elements of a data center are:

• Application
• DBMS
• Host or Compute
• Network
• Storage

All these components work together in the data center.

Storage-Centric Architecture: Storage is managed centrally and independently of servers.

Module 2 – Data Centre Environment

Hypervisor software: VMware ESXi

The hypervisor abstracts CPU, memory, and storage resources to run multiple virtual machines concurrently on the same physical server.

VMware ESXi is a hypervisor that installs on x86 hardware to enable server virtualization.

All the files that make up a VM are typically stored in a single directory on a cluster file system called Virtual Machine File System (VMFS).

The physical machine that houses ESXi is called an ESXi host.

ESXi has two key components: VMkernel and Virtual Machine Monitor.

VMkernel provides functionality similar to that found in other operating systems, such as process creation, file system management, and process scheduling.

The virtual machine monitor is responsible for executing commands on the CPUs and performing Binary Translation (BT).

Module 3 – Data Protection - RAID

RAID techniques (striping, mirroring, and parity) form the basis for defining various RAID levels.

Striping is a technique of spreading data across multiple drives (more than one) in order to use the drives in parallel. Striped RAID does not provide any data protection unless parity or mirroring is used.

Mirroring is a technique whereby the same data is stored on two different disk drives,

yielding two copies of the data.

Mirroring involves duplication of data, so the amount of storage capacity needed is twice the

amount of data being stored. Therefore, mirroring is considered expensive and

is preferred for mission-critical applications that cannot afford the risk of

any data loss. Mirroring improves read performance because read requests can be

serviced by both disks. However, write performance is slightly lower than that

in a single disk because each write request manifests as two writes on the disk

drives. Mirroring does not deliver the same levels of write performance as a

striped RAID.

Parity is a method to protect striped data from disk

drive failure without the cost of mirroring. An additional disk drive is added

to hold parity, a mathematical construct that allows re-creation of the missing

data. Parity is a redundancy technique that ensures protection of data without

maintaining a full set of duplicate data. Parity calculation is a bitwise

XOR operation.
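The XOR parity idea can be sketched in a few lines. This is a minimal illustration, assuming three data strips of two bytes each; the strip contents and the helper name `xor_parity` are made up for the example.

```python
from functools import reduce

def xor_parity(strips):
    """Return the bytewise XOR of equally sized strips."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*strips))

# Three hypothetical data strips, one per data disk.
data_strips = [b"\x0f\xa0", b"\x33\x0c", b"\x55\xff"]
parity = xor_parity(data_strips)

# Simulate losing the second disk: XOR of the survivors and the parity
# strip re-creates the missing strip.
rebuilt = xor_parity([data_strips[0], data_strips[2], parity])
print(rebuilt == data_strips[1])
```

Because XOR is its own inverse, the same function serves both to compute parity and to rebuild a missing strip.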

However, there are some disadvantages of using parity. Parity information is generated

from data on the data disk. Therefore, parity is recalculated every time there

is a change in data. This recalculation is time-consuming and affects the

performance of the RAID array.

The RAID 0 configuration uses data striping, where data is striped across all

the disks within a RAID set. RAID 0 is a good option for applications that need

high I/O throughput.

RAID 0 does not provide data protection or availability.

RAID 1 is based on the mirroring technique. In this RAID configuration, data is

mirrored to provide fault tolerance. A RAID 1 set consists of two disk drives

and every write is written to both disks.

RAID 1 is suitable for applications that require high availability and where cost is not a constraint.

RAID 1+0 combines the performance benefits of RAID 0 with the redundancy benefits of RAID 1. It uses both mirroring and striping techniques and combines their benefits.

This RAID type requires an even number of disks, the minimum being four. RAID

1+0 is also known as RAID 10 (Ten) or RAID 1/0. RAID 1+0 is also called striped

mirror. The basic element of RAID 1+0 is a mirrored pair, which means that data

is first mirrored and then both copies of the data are striped across multiple

disk drive pairs in a RAID set.

RAID 3 stripes data for performance and uses parity for fault tolerance. Parity information is stored on a dedicated drive so that the data can be reconstructed if a drive fails in a RAID set. RAID 3 always reads and writes complete stripes of data across all disks because the drives operate in parallel. There are no partial writes that update one out of many strips in a stripe.

RAID 4, similar to RAID 3, stripes data for high performance and uses parity for

improved fault tolerance. Data is striped across all disks except the parity

disk in the array. Parity information is stored on a dedicated disk. Unlike

RAID 3, data disks in RAID 4 can be accessed independently so that specific

data elements can be read or written on a single disk without reading or

writing an entire stripe. RAID 4 provides good read throughput and reasonable

write throughput. RAID 4 is rarely used.

RAID 5 is a versatile RAID implementation. It is similar to RAID 4 because it uses

striping. The drives (strips) are also independently accessible. The difference

between RAID 4 and RAID 5 is the parity location. In RAID 4, parity is written

to a dedicated drive, creating a write bottleneck for the parity disk. In RAID

5, parity is distributed across all disks to overcome the write bottleneck of a

dedicated parity disk.

RAID 6 works the same way as RAID 5, except that RAID 6 includes a second parity

element to enable survival if two disk failures occur in a RAID set. Therefore,

a RAID 6 implementation requires at least four disks. RAID 6 distributes the

parity across all the disks. The write penalty (explained later in this module)

in RAID 6 is more than that in RAID 5; therefore, RAID 5 writes perform better

than RAID 6. The rebuild operation in RAID 6 may take longer than that in RAID

5 due to the presence of two parity sets.

RAID impact on performance: In both mirrored and parity RAID configurations, every write operation translates into more I/O overhead for the disks, which is referred to as a write penalty. The write penalty is 4 (2 reads + 2 writes) for RAID 5 and 6 (3 reads + 3 writes) for RAID 6.
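To see what the write penalty means for disk load, here is a rough sketch. It assumes reads cost one disk I/O each and writes cost the write penalty. The RAID 5 and RAID 6 values come from the notes; the value 2 for RAID 1 follows from each mirrored write becoming two disk writes. The function name and the workload numbers are hypothetical.

```python
# Disk I/Os generated per host write for each RAID level.
WRITE_PENALTY = {"RAID 1": 2, "RAID 5": 4, "RAID 6": 6}

def backend_iops(host_iops, write_percent, raid_level):
    """Estimate the disk IOPS generated by a host workload."""
    reads = host_iops * (100 - write_percent) / 100
    writes = host_iops * write_percent / 100
    return reads + WRITE_PENALTY[raid_level] * writes

# Hypothetical workload: 1000 host IOPS, 40% of them writes.
for level in WRITE_PENALTY:
    print(level, backend_iops(1000, 40, level))
```

For the same host workload, the disks behind a RAID 6 set have to service noticeably more I/Os than those behind RAID 5 or RAID 1.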

A hot spare refers to a

spare drive in a RAID array that temporarily replaces a failed disk drive by

taking the identity of the failed disk drive. A hot spare can be configured as

automatic or user initiated, which specifies how it will be used in the

event of disk failure.

Module 4 – Intelligent Storage Systems

Intelligent storage systems are feature-rich RAID arrays that provide highly optimized I/O processing capabilities. These storage systems are configured with a large amount of memory (called cache) and multiple I/O paths and use sophisticated algorithms to meet the requirements of performance-sensitive applications.

These systems also support flash drives and other modern-day technologies, such as virtual storage provisioning and automated storage tiering.

An intelligent storage system consists of four key components: front end, cache, back end, and physical disks.

In modern intelligent storage systems, front end, cache, and back end are typically integrated on a single board (referred to as a storage processor or storage controller).

Each front-end controller has processing logic that executes the appropriate transport protocol, such as Fibre Channel, iSCSI, FICON, or FCoE for storage connections. Front-end controllers route data to and from cache via the internal data bus. When the cache receives the write data, the controller sends an acknowledgment message back to the host.

Cache is semiconductor memory where data is

placed temporarily to reduce the time required to service I/O requests from the

host.

Cache can be implemented as either dedicated cache or global cache. With dedicated

cache, separate sets of memory locations are reserved for reads and writes. In

global cache, both reads and writes can use any of the available memory

addresses.

Cache management is more efficient in a global cache implementation because only one

global set of addresses has to be managed.

Cache Management Algorithms:

Least Recently Used (LRU): This algorithm discards the data that has not been accessed for a long time.

Most Recently Used (MRU): This algorithm is the opposite of LRU; the pages that have been accessed most recently are freed up or marked for reuse.
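The difference between the two policies is easy to see in a toy cache. The sketch below is an assumption-laden illustration, not how a storage array implements it: the class name `PageCache`, the page identifiers, and the tiny capacity are all hypothetical.

```python
from collections import OrderedDict

class PageCache:
    """Toy page cache with a selectable eviction policy ("LRU" or "MRU")."""

    def __init__(self, capacity, policy="LRU"):
        self.capacity = capacity
        self.policy = policy
        self.pages = OrderedDict()  # least recently used first, most recent last

    def access(self, page_id, data=None):
        """Touch a page; return the id of the evicted page, or None."""
        if page_id in self.pages:
            self.pages.move_to_end(page_id)  # mark as most recently used
            return None
        evicted = None
        if len(self.pages) >= self.capacity:
            # LRU frees the oldest entry; MRU frees the newest one.
            evicted, _ = self.pages.popitem(last=(self.policy == "MRU"))
        self.pages[page_id] = data
        return evicted

lru = PageCache(2, "LRU")
mru = PageCache(2, "MRU")
for cache in (lru, mru):
    for page in ("a", "b", "a"):
        cache.access(page)

lru_victim = lru.access("c")  # "b" is the least recently used page
mru_victim = mru.access("c")  # "a" is the most recently used page
print(lru_victim, mru_victim)
```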

Cache Management - Watermarking: As cache fills, the storage system must take action to

flush dirty pages (data written into the cache but not yet written to the disk)

to manage space availability. Flushing is the process that commits data

from cache to the disk. On the basis of the I/O access rate and pattern, high

and low levels called watermarks are set in cache to manage the flushing

process.

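High watermark (HWM) is the cache utilization level at which the storage system starts high-speed flushing of cache data. Low watermark (LWM) is the point at which the storage system stops flushing data to the disks.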

Idle flushing:

Occurs continuously, at a modest rate, when the cache utilization level is

between the high and low watermark.

High watermark flushing:

Activated when cache utilization hits the high watermark.

Forced flushing:

Occurs in the event of a large I/O burst when cache reaches 100 percent of its

capacity, which significantly affects the I/O response time.
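The three flushing modes can be summarized as a simple decision on cache utilization. This is a sketch under assumptions: utilization and watermarks are expressed as percentages, the watermark values used in the example are arbitrary, and the behaviour exactly at a watermark boundary is a guess.

```python
def flush_mode(utilization, low_watermark, high_watermark):
    """Pick the flushing mode for a given cache utilization (all in percent)."""
    if utilization >= 100:
        return "forced"            # cache is full: large I/O burst
    if utilization >= high_watermark:
        return "high watermark"    # utilization has hit the HWM
    if utilization > low_watermark:
        return "idle"              # between LWM and HWM: modest, continuous rate
    return "none"                  # at or below the LWM, flushing stops

# Hypothetical watermarks: LWM at 60%, HWM at 80%.
for used in (50, 70, 85, 100):
    print(used, flush_mode(used, 60, 80))
```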

Cache Data Protection: Cache is

volatile memory, so a power failure or any kind of cache failure will cause

loss of the data that is not yet committed to the disk. This risk of losing

uncommitted data held in cache can be mitigated using cache mirroring and

cache vaulting:

Cache mirroring: Each write

to cache is held in two different memory locations on two independent memory

cards. In cache mirroring approaches, the problem of maintaining cache

coherency is introduced. Cache coherency means that data in two different

cache locations must be identical at all times.

Cache vaulting: A set of physical disks is used to dump the contents of cache during a power failure. These disks are called vault drives. When

power is restored, data from these disks is written back to write cache and

then written to the intended disks.

Server Flash Caching Technology: Server flash-caching technology uses intelligent caching software and a PCI Express (PCIe) flash card on the host. This dramatically improves application performance by reducing latency and accelerating throughput. Server

flash-caching technology works in both physical and virtual environments and

provides performance acceleration for read-intensive workloads. This technology

uses minimal CPU and memory resources from the server by offloading flash

management onto the PCIe card.

Storage Provisioning: Provisioning is the process of assigning storage resources to hosts based on capacity,

availability, and performance requirements of applications running on the

hosts. Storage provisioning can be performed in two ways: traditional and

virtual.

It is highly recommended that the RAID set be created from drives of the same type,

speed, and capacity to ensure maximum usable capacity, reliability, and

consistency in performance. For example, if drives of different capacities are

mixed in a RAID set, the capacity of the smallest drive is used from each disk

in the set to make up the RAID set’s overall capacity.
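The effect of mixing drive capacities is simple arithmetic. The sketch below computes the raw capacity a RAID set gets from its drives before any mirroring or parity overhead is subtracted; the function name and the drive sizes are hypothetical.

```python
def raid_set_raw_capacity(drive_capacities_gb):
    """Raw capacity of a RAID set: the smallest drive size is used on every drive."""
    return min(drive_capacities_gb) * len(drive_capacities_gb)

mixed = [300, 300, 600, 600]             # hypothetical drive sizes in GB
used = raid_set_raw_capacity(mixed)      # 4 drives x 300 GB each
wasted = sum(mixed) - used               # capacity left unused on the larger drives
print(used, wasted)
```

In this example a third of the purchased capacity goes unused, which is why drives of the same capacity are recommended.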

Logical units are created from the RAID sets by partitioning the available capacity into smaller units (seen as slices of the RAID set). Logical units are spread across all the physical disks that belong to that set. Each logical unit created from the RAID set is assigned a unique ID, called a logical unit number (LUN). LUNs hide the organization

and composition of the RAID set from the hosts. LUNs created by traditional

storage provisioning methods are also referred to as thick LUNs to

distinguish them from the LUNs created by virtual provisioning methods.

When a LUN is configured and assigned to a non-virtualized host, a bus scan is

required to identify the LUN. This LUN appears as a raw disk to the operating

system. To make this disk usable, it is formatted with a file system and then

the file system is mounted.

In a virtualized host environment, the LUN is assigned to the hypervisor, which

recognizes it as a raw disk. This disk is configured with the hypervisor file

system, and then virtual disks are created on it. Virtual disks are

files on the hypervisor file system. The virtual disks are then assigned to

virtual machines and appear as raw disks to them. To make the virtual disk

usable to the virtual machine, similar steps are followed as in a

non-virtualized environment. Here, the LUN space may be shared and accessed

simultaneously by multiple virtual machines.

Virtual machines can also access a LUN directly on the storage system. In this method,

the entire LUN is allocated to a single virtual machine. Storing data in this

way is recommended when the applications running on the virtual machine are response-time

sensitive, and sharing storage with other virtual machines may impact their

response time.

LUN Expansion – MetaLUN: MetaLUN

is a method to expand LUNs that require additional capacity or performance.

A metaLUN can be created by combining two or more LUNs. A metaLUN consists of a

base LUN and one or more component LUNs. MetaLUNs can be either concatenated

or striped.

All LUNs in both concatenated and striped expansion must reside on the same disk-drive type: either all Fibre Channel or all ATA.

Virtual provisioning enables

creating and presenting a LUN with more capacity than is physically allocated

to it on the storage array. The LUN created using virtual provisioning is

called a thin LUN to distinguish it from the traditional LUN.

Thin LUNs do not require physical storage to be completely allocated to them at the

time they are created and presented to a host. Physical storage is allocated to

the host “on-demand” from a shared pool of physical capacity. Shared pools can

be homogeneous (containing a single drive type) or heterogeneous (containing

mixed drive types, such as flash, FC, SAS, and SATA drives).
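On-demand allocation from a shared pool can be modelled roughly as follows. This is a simplified sketch: the class `ThinPool`, its method names, and the GB granularity are assumptions for illustration, and real arrays allocate in much smaller fixed-size units.

```python
class ThinPool:
    """Toy shared pool that backs thin LUNs with physical capacity on demand."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.allocated_gb = 0
        self.luns = {}  # LUN name -> [presented_gb, consumed_gb]

    def create_thin_lun(self, name, presented_gb):
        # Creating the LUN consumes no physical capacity.
        self.luns[name] = [presented_gb, 0]

    def write(self, name, gb):
        presented, consumed = self.luns[name]
        if consumed + gb > presented:
            raise ValueError("write exceeds the capacity presented to the host")
        if self.allocated_gb + gb > self.physical_gb:
            raise RuntimeError("shared pool is out of physical capacity")
        self.luns[name][1] = consumed + gb
        self.allocated_gb += gb

pool = ThinPool(physical_gb=100)
pool.create_thin_lun("lun_a", presented_gb=80)
pool.create_thin_lun("lun_b", presented_gb=80)   # 160 GB presented on 100 GB physical
pool.write("lun_a", 30)
print(pool.allocated_gb)
```

The pool presents more capacity than it physically holds, so the administrator has to watch pool consumption and add capacity before it runs out.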

Virtual provisioning improves storage capacity utilization and simplifies storage

management.

Both traditional and thin LUNs can coexist in the same storage array. Based on the

requirement, an administrator may migrate data between thin and traditional

LUNs.

LUN Masking: LUN masking is a process that provides data access control by defining which LUNs a host can access. The

LUN masking function is implemented on the storage array. This ensures that

volume access by hosts is controlled appropriately, preventing unauthorized or

accidental use in a shared environment.
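Conceptually, LUN masking is a lookup table kept on the array. The sketch below assumes hosts are identified by the WWPN of their HBA, which is a common convention but is not stated in these notes; the WWPN values and LUN numbers are invented.

```python
# Hypothetical masking table on the storage array:
# initiator WWPN -> set of LUN IDs that initiator may access.
masking_table = {
    "10:00:00:00:c9:aa:bb:01": {0, 1},
    "10:00:00:00:c9:aa:bb:02": {2},
}

def can_access(initiator_wwpn, lun_id, table=masking_table):
    """Return True only if the LUN is unmasked for this initiator."""
    return lun_id in table.get(initiator_wwpn, set())

print(can_access("10:00:00:00:c9:aa:bb:01", 1))
print(can_access("10:00:00:00:c9:aa:bb:02", 1))
```

An initiator that is not in the table sees no LUNs at all, which is the safe default in a shared environment.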

Intelligent storage systems generally fall into one of the following two categories:

high-end storage systems, and midrange storage systems. High-end storage systems, referred to as active-active arrays,

are generally aimed at large enterprise applications. These systems are

designed with a large number of controllers and cache memory. An active-active

array implies that the host can perform I/Os to its LUNs through any of the

available controllers.

Midrange storage systems are also referred to as active-passive arrays and are best suited for small- and medium-sized enterprise applications.

In an active-passive array, a host can perform I/Os to a LUN only through the controller that owns the LUN. For example, if controller A owns a LUN, the host can perform reads or writes to that LUN only through the path to controller A. The path to controller B remains passive, and no I/O activity is performed through it.

Midrange storage systems are typically designed with two controllers, each of which contains host interfaces, cache, RAID controllers, and interfaces to disk drives.

Practical examples of intelligent storage systems are EMC VNX and EMC Symmetrix VMAX.

The EMC VNX storage array

is EMC’s midrange storage offering. EMC VNX is a unified storage platform that

offers storage for block, file, and object-based data within the same array.

EMC Symmetrix is EMC’s

high-end storage offering. The EMC

Symmetrix offering includes Symmetrix Virtual Matrix (VMAX) series.

• Incrementally scalable to 2,400 disks
• Supports up to 8 VMAX engines (each VMAX engine contains a pair of directors)
• Supports flash drives, fully automated storage tiering (FAST), virtual provisioning, and cloud computing
• Supports up to 1 TB of global cache memory
• Supports FC, iSCSI, GigE, and FICON for host connectivity
• Supports RAID levels 1, 1+0, 5, and 6
• Supports storage-based replication through EMC TimeFinder and EMC SRDF
• Highly fault-tolerant design that allows non-disruptive upgrades and full component-level redundancy with hot-swappable replacements

Module 5 – Fibre Channel Storage Area Network (FC SAN)

Overview of FC SAN

Direct-attached storage (DAS) is often referred to as a stovepipe storage environment. Hosts

“own” the storage, and it is difficult to manage and share resources on these

isolated storage devices. Efforts to organize this dispersed data led to the

emergence of the storage area network (SAN).

What is a SAN?

A SAN is a high-speed, dedicated network of servers and shared storage devices. It enables storage consolidation and allows storage to be shared across multiple

servers. This improves the utilization of storage resources compared to

direct-attached storage architecture. SAN also enables organizations to connect

geographically dispersed servers and storage.

Common SAN deployments are Fibre Channel (FC) SAN and IP SAN. Fibre Channel SAN uses

Fibre Channel protocol for the transport of data, commands, and status

information between servers (or hosts) and storage devices. IP SAN uses

IP-based protocols for communication.

Understanding Fibre Channel: Fibre Channel is a high-speed network technology that runs on high-speed optical fiber cables and serial copper cables.

High data transmission speed is an important feature of the FC networking technology. In comparison with Ultra-SCSI, which is commonly used in DAS environments, FC is a significant leap in storage networking technology. The 16 GFC implementation of FC offers a throughput of 3200 MB/s (raw bit rate of 16 Gb/s), whereas Ultra640 SCSI is available with a throughput of 640 MB/s. The credit-based flow control mechanism in FC delivers data as fast as the destination buffer is able to receive it, without dropping frames. FC also has very little transmission overhead. The FC architecture is highly scalable, and theoretically, a single FC network can accommodate approximately 15 million devices.

Note: Fibre refers to the protocol, whereas fiber refers to the medium.

Components of FC SAN: Servers and storage are the end points or devices in the SAN (called ‘nodes’). FC SAN infrastructure consists of node ports, cables,

connectors, interconnecting devices (such as FC switches or hubs), along with

SAN management software.

Node Ports: Each node requires one or more ports to

provide a physical interface for communicating with other nodes. These ports

are integral components of host adapters, such as HBA, and storage front-end

controllers or adapters. In an FC environment a port operates in full-duplex

data transmission mode with a transmit (Tx)

link and a receive (Rx) link.

Cables: SAN implementations use optical fiber cabling. Copper can be used for shorter distances for back-end connectivity because it provides an acceptable signal-to-noise ratio for distances up to 30 meters.

There are two types of optical cables: multimode and single-mode. Multimode fiber

(MMF) cable carries multiple beams of light projected at different angles

simultaneously onto the core of the cable. Based on the bandwidth, multimode

fibers are classified as OM1 (62.5μm core), OM2 (50μm core), and

laser-optimized OM3 (50μm core). An MMF cable is typically used for short

distances because of signal degradation (attenuation) due to modal dispersion.

Single-mode fiber (SMF) carries a single ray of light projected at the center of the core. These cables are available in core diameters of 7 to 11 microns; the most common size is 9 microns. Single-mode fiber provides minimum signal attenuation over maximum distance (up to 10 km).

MMFs are generally used within data centers for shorter distance runs, whereas SMFs

are used for longer distances.

Connectors: A connector is attached at the end of a cable to enable swift connection and disconnection of the cable to and from a port. The standard connector (SC) and the Lucent connector (LC) are two commonly used connectors for fiber-optic cables. The straight tip (ST) connector is another fiber-optic connector, which is often used with fiber patch panels.

Interconnecting Devices: FC hubs, switches, and directors are the interconnect devices commonly used in

FC SAN. Hubs provide limited connectivity and scalability. Hubs are used as

communication devices in FC-AL implementations. Because of the availability of

low-cost and high-performance switches, hubs are no longer used in FC SANs.

Switches are more intelligent than hubs and directly route data from one physical port

to another. Therefore, nodes do not share the data path. Instead, each node has

a dedicated communication path.

Directors are high-end switches with a higher port count and

better fault-tolerance capabilities.

Switches are available with a fixed port count or with modular design. In a modular switch, the port count is increased by installing additional port cards to open slots. The architecture of a director is always modular.


SAN Management Software: SAN

management software manages the interfaces between hosts, interconnect devices,

and storage arrays. The software provides a view of the SAN environment and

enables management of various resources from one central console.

FC Interconnectivity Options: The FC architecture supports three basic interconnectivity options: point-to-point, Fibre Channel arbitrated loop (FC-AL), and Fibre Channel switched fabric (FC-SW).

Point-to-point connectivity is the simplest FC configuration: two devices are connected directly to each other.

The point-to-point configuration offers limited connectivity because only two devices can communicate with each other at a given time. Standard DAS uses point-to-point connectivity.

In the FC-AL

connectivity configuration, devices are attached to a shared loop. FC-AL

has the characteristics of a token ring topology and a physical star topology.

In FC-AL, each device contends with other devices to perform I/O operations.

Devices on the loop must “arbitrate” to gain control of the loop. At any given

time, only one device can perform I/O operations on the loop.

FC-AL uses only 8 bits of the 24-bit Fibre Channel address (the remaining 16 bits are

masked) and enables the assignment of 127 valid addresses to the ports. Hence,

it can support up to 127 devices on a loop. One address is reserved for

optionally connecting the loop to an FC switch port. Therefore, up to 126 nodes

can be connected to the loop.

FC-SW connectivity is also

referred to as fabric connect. A fabric is a logical space in which all nodes

communicate with one another in a network. This virtual space can be created

with a switch or a network of switches. Each port in a fabric has a unique

24-bit Fibre Channel address for communication.

In a switched fabric, the link between any two switches is called an interswitch link (ISL).

Unlike a loop configuration, an FC-SW network provides a dedicated path and scalability.

The addition or removal of a device in a switched fabric is minimally

disruptive; it does not affect the ongoing traffic between other devices.

Port Types in Switched Fabric: Ports in a switched fabric can be one of the following types:

• N_Port: An end point in the fabric. This port is also known as the node port. Typically, it is a host port (HBA) or a storage array port that is connected to a switch in a switched fabric.
• E_Port: A port that forms the connection between two FC switches. This port is also known as the expansion port. The E_Port on an FC switch connects to the E_Port of another FC switch in the fabric through ISLs.
• F_Port: A port on a switch that connects an N_Port. It is also known as a fabric port.
• G_Port: A generic port on a switch that can operate as an E_Port or an F_Port and determines its functionality automatically during initialization.

Fibre Channel (FC) Architecture: Traditionally, host computer operating systems have

communicated with peripheral devices over channel connections, such as

ESCON and SCSI.

The FC architecture represents true channel/network integration and captures some

of the benefits of both channel and network technology. FC SAN uses the Fibre

Channel Protocol (FCP) that provides both channel speed for data transfer with

low protocol overhead and scalability of network technology.

Fibre Channel provides a serial data transfer interface that operates over copper

wire and optical fiber. FCP is the implementation of SCSI over an FC network.

In FCP architecture, all external and remote storage devices attached to the

SAN appear as local devices to the host operating system.

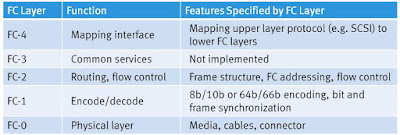

FC Protocol Stack: FCP defines the communication protocol in five layers, FC-0 through FC-4. The FC-3 layer is not implemented.

FC Addressing in Switched Fabric: An FC address is dynamically assigned when a node port logs on to the fabric. The FC address has a distinct format made up of three fields: domain ID, area ID, and port ID.

The first field of the FC address contains the domain ID of the switch. A domain ID is a unique number provided to each switch in the fabric. Although this is an 8-bit field, there are only 239 available addresses for domain IDs

because some addresses are deemed special and reserved for fabric management

services. For example, FFFFFC is reserved for the name server, and FFFFFE is

reserved for the fabric login service.

The area ID is used to identify a group

of switch ports used for connecting nodes. An example of a group of ports with

common area ID is a port card on the switch. The last field, the port ID,

identifies the port within the group.

Therefore, the maximum possible number of node ports in a switched fabric is calculated as:

239 domains × 256 areas × 256 ports = 15,663,104
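The address layout and the calculation above can be checked with a short sketch. It assumes the 24-bit address is split into three 8-bit fields in the order domain ID, area ID, port ID; the function name and the sample address are hypothetical.

```python
def split_fc_address(address):
    """Split a 24-bit FC address into (domain ID, area ID, port ID)."""
    domain_id = (address >> 16) & 0xFF
    area_id = (address >> 8) & 0xFF
    port_id = address & 0xFF
    return domain_id, area_id, port_id

# Hypothetical address 0x0A1B2C: domain 0x0A, area 0x1B, port 0x2C.
fields = split_fc_address(0x0A1B2C)
print([hex(field) for field in fields])

# 239 usable domain IDs, 256 areas per domain, 256 ports per area.
max_node_ports = 239 * 256 * 256
print(max_node_ports)
```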

World Wide Name (WWN): Each device in the FC environment is assigned a 64-bit unique identifier called the World Wide Name (WWN). The Fibre Channel

environment uses two types of WWNs: World Wide Node Name (WWNN) and World Wide

Port Name (WWPN). Unlike an FC address, which is assigned dynamically, a WWN is a static name for each node on

an FC network. WWNs are similar to the Media Access Control (MAC) addresses

used in IP networking. WWNs are burned into the hardware or assigned through

software.

Several configuration definitions in a SAN use WWNs for identifying storage devices and HBAs.

The name server in an FC environment keeps the association of WWNs to

the dynamically created FC addresses for nodes.

Structure and Organization of FC Data: In an FC network, data transport is analogous to a conversation between two people, whereby a frame represents a word, a sequence represents a sentence, and an exchange represents a conversation.

Exchange: An exchange operation enables two node ports to identify and manage a set of

information units. The structure of these information units is defined in the

FC-4 layer. This unit maps to a sequence. An exchange is composed of one or

more sequences.

Sequence: A sequence refers to a contiguous set of frames that are sent from one port to another. A sequence corresponds to an information unit, as defined by the upper-layer protocol (ULP).

Frame: A frame is the fundamental unit of data

transfer at Layer 2. An FC frame consists of five parts: start of frame (SOF),

frame header, data field, cyclic redundancy check (CRC), and end of frame

(EOF).

Fabric Services: All FC switches, regardless of the manufacturer, provide a common set of services as defined in the Fibre Channel standards.

These services are available at certain predefined addresses.

Login Types in Switched Fabric: Fabric services define three login types:

Fabric login (FLOGI): Performed between an N_Port and an F_Port. To log on to the fabric, a node sends a FLOGI frame with the WWNN and WWPN parameters to the login service at the predefined FC address FFFFFE (Fabric Login Server).

Port login (PLOGI): Performed between two N_Ports to establish a session. The initiator N_Port sends a PLOGI request frame to the target N_Port, which accepts it.

Process login (PRLI): Also performed between two N_Ports. This login relates to the FC-4 ULPs, such as SCSI.

FC SAN Topologies and Zoning

Mesh Topology: A mesh topology may be one of two types: full mesh or partial mesh. In a full

mesh, every switch is connected to every other switch in the topology. A full

mesh topology may be appropriate when the number of switches involved is small.

In a full mesh topology, a maximum of one ISL or hop is required for

host-to-storage traffic. However, with the increase in the number of switches,

the number of switch ports used for ISL also increases. This reduces the

available switch ports for node connectivity.
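The port cost of a full mesh grows quickly with the number of switches. The sketch below assumes exactly one ISL between each pair of switches; the function names and the 32-port switch size are hypothetical.

```python
def full_mesh_isl_count(switches):
    """Number of ISLs when every switch connects to every other switch once."""
    return switches * (switches - 1) // 2

def isl_ports_per_switch(switches):
    """Ports each switch gives up to ISLs in a full mesh."""
    return switches - 1

# Hypothetical 32-port switches: ports left for hosts and storage.
for n in (2, 4, 8):
    print(n, full_mesh_isl_count(n), 32 - isl_ports_per_switch(n))
```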

Core-Edge Topology: The core-edge fabric topology has two types of switch tiers. The edge tier is

usually composed of switches and offers an inexpensive approach to adding more

hosts in a fabric. Each switch at the edge tier is attached to a switch at the

core tier through ISLs.

The core tier is usually composed of directors that ensure high

fabric availability. In addition, typically all traffic must either traverse

this tier or terminate at this tier. In this configuration, all storage devices

are connected to the core tier, enabling host-to-storage traffic to traverse

only one ISL.

In core-edge topology, the edge-tier switches are not connected to each other.

The core-edge topology provides higher scalability than mesh topology and provides one-hop storage access to all servers in the environment.

Zoning: Zoning is an FC switch function that enables node ports within the fabric to be logically segmented into groups and to communicate with each other within the group.

Each zone comprises zone members (HBA and array ports).

Whenever a change takes place in the name server database, the fabric controller sends a

Registered State Change Notification (RSCN)

to all the nodes impacted by the change. If zoning is not configured, the

fabric controller sends an RSCN to all the nodes in the fabric.

In the presence of zoning, a fabric sends the RSCN to only those nodes in a zone

where the change has occurred.

Zoning also provides access

control, along with other access control mechanisms, such as LUN masking.

Zoning provides control by allowing only the members in the same zone to

establish communication with each other.
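The access rule that zoning enforces can be expressed as a membership check. This is a simplified model: the zone names and member labels below are invented, and a real fabric identifies members by switch port or WWN rather than by friendly names.

```python
# Hypothetical active zone set: zone name -> set of member ports.
active_zone_set = {
    "zone_app": {"hba1", "array_p0"},
    "zone_backup": {"hba2", "array_p0"},
}

def can_communicate(port_a, port_b, zones=active_zone_set):
    """Two ports may talk only if at least one zone contains both of them."""
    return any(port_a in members and port_b in members
               for members in zones.values())

print(can_communicate("hba1", "array_p0"))
print(can_communicate("hba1", "hba2"))
```

A port can belong to more than one zone, as the array port does here, while the two HBAs stay isolated from each other.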

Multiple zone sets may be defined in a fabric, but only one zone set can be active at a

time.

Types of Zoning : Zoning can

be categorized into three types:

Port zoning: Uses the physical address of switch ports to define zones. In port zoning, access to a node is determined by the physical switch port to which the node is connected.

WWN

zoning: Uses World Wide Names to define zones. The zone members are the

unique WWN addresses of the HBA and its targets (storage devices).

Mixed

zoning: Combines the qualities of both WWN zoning and port zoning.

Virtualization in SAN :

Block-level storage virtualization: aggregates block storage devices (LUNs)

and enables provisioning of virtual storage volumes, independent of the

underlying physical storage. A virtualization layer, which exists at the SAN,

abstracts the identity of physical storage devices and creates a storage pool

from heterogeneous storage devices.

Virtual

volumes are created from the storage pool and assigned to the hosts. Instead of

being directed to the LUNs on the individual storage arrays, the hosts are

directed to the virtual volumes provided by the virtualization layer.

Typically,

the virtualization layer is managed via a dedicated virtualization appliance

to which the hosts and the storage arrays are connected.

Block-level storage virtualization also provides the advantage of nondisruptive data migration. In a traditional SAN environment, LUN migration from one array to another is an offline event because the hosts need to be updated to reflect the new array configuration.

Previously, block-level storage virtualization provided nondisruptive data migration only within a data center. The new generation of block-level storage virtualization enables nondisruptive data migration both within and between data centers. It provides the capability to connect the virtualization layers at multiple data centers.

Virtual SAN (also

called virtual fabric) : is a logical fabric on an FC SAN, which enables

communication among a group of nodes regardless of their physical location in

the fabric.

Multiple

VSANs may be created on a single physical SAN.

Each

VSAN acts as an independent fabric with its own set of fabric services, such as

name server, and zoning.

VSANs

improve SAN security, scalability, availability, and manageability.

Practical

examples are EMC Connectrix and VPLEX.

The EMC Connectrix family

represents the industry’s most extensive selection of networked storage

connectivity products.

Connectrix

integrates high-speed FC connectivity, highly resilient switching technology,

options for intelligent IP storage networking, and I/O consolidation with

products that support Fibre Channel over Ethernet (FCoE). The connectivity

products offered under the Connectrix brand are: Enterprise directors,

departmental switches, and multi-purpose

switches.

EMC VPLEX : EMC VPLEX is

the next-generation solution for block-level virtualization and data mobility

both within and across datacenters. The VPLEX appliance resides between the

servers and heterogeneous storage devices. It forms a pool of distributed block

storage resources and enables creating virtual storage volumes from the pool.

These virtual volumes are then allocated to the servers. The

virtual-to-physical-storage mapping remains hidden to the servers.

The

VPLEX family consists of three products: VPLEX

Local, VPLEX Metro, and VPLEX Geo.

Module 6 - IP SAN and FCoE

IP SAN : Two primary

protocols that leverage IP as the transport mechanism are Internet SCSI (iSCSI)

and Fibre Channel over IP (FCIP).

A traditional SAN enables the transfer of block I/O over Fibre Channel and provides high performance and scalability. These advantages of FC SAN come with the additional cost of buying FC components, such as FC HBAs and switches.

The technology of transporting block I/O over an IP network is referred to as IP SAN.

Advantages of IP SAN: The existing network infrastructure can be leveraged, which reduces cost compared to investing in new FC SAN hardware and software. Many long-distance disaster recovery solutions already leverage IP-based networks. Many robust and mature security options are available for IP networks.

IP SAN Protocol: iSCSI : iSCSI is the encapsulation of SCSI I/O over IP. iSCSI is an IP-based protocol that establishes and manages connections between host and storage over IP.

Components of iSCSI : An initiator (host, for example with an iSCSI HBA), a target (storage or iSCSI gateway), and an IP-based network are the key iSCSI components. In an implementation that uses an existing FC array for iSCSI communication, an iSCSI gateway is used. If an iSCSI-capable storage array is deployed, then a host with the iSCSI initiator can directly communicate with the storage array over an IP network.

iSCSI Host Connectivity

Options

: A standard NIC with software iSCSI initiator, a TCP offload engine (TOE) NIC

with software iSCSI initiator, and an iSCSI HBA are the three iSCSI host

connectivity options.

A

standard NIC with a software iSCSI initiator is the simplest and least

expensive connectivity option, but places additional overhead on the host CPU.

A TOE NIC offloads TCP management functions from the host and leaves only the iSCSI functionality to the host processor. Although this solution improves performance, the iSCSI functionality is still handled by a software initiator that requires host CPU cycles.

An iSCSI HBA is capable of providing performance benefits because it offloads the entire iSCSI and TCP/IP processing from the host processor. The use of an iSCSI HBA is also the simplest way to boot hosts from a SAN environment via iSCSI (with a plain NIC, the NIC needs to obtain an IP address before the operating system loads).

iSCSI Topologies : Two topologies of iSCSI implementations are native and bridged.

Native iSCSI : Native topology does not have FC components. The initiators may be either directly attached to targets or connected through the IP network.

FC

components are not required for iSCSI connectivity if an iSCSI-enabled array is

deployed.

Bridged iSCSI : Bridged

topology enables the coexistence of FC with IP by providing iSCSI-to-FC

bridging functionality. An external device, called a gateway or a multiprotocol

router, must be used to facilitate the communication between the iSCSI host

and FC storage (does not have iSCSI ports). The gateway converts IP packets to

FC frames and vice versa.

Combining FC and Native

iSCSI

Connectivity: The most common

topology is a combination of FC and native iSCSI. Typically, a storage array

comes with both FC and iSCSI ports that enable iSCSI and FC connectivity in the

same environment. No bridge device needed.

iSCSI Protocol Stack : SCSI is the command protocol that works

at the application layer of the Open

System Interconnection (OSI) model.

iSCSI is the session-layer protocol

that initiates a reliable session between devices that recognize SCSI commands

and TCP/IP.

iSCSI Discovery : An initiator must discover the location of its targets on the network and the names of the targets available to it before it can establish a session. This discovery can take place in two ways: SendTargets discovery or Internet Storage Name Service (iSNS).

In SendTargets discovery, the initiator is manually configured with the target’s network portal to establish a discovery session.

iSNS

enables automatic discovery of iSCSI devices on an IP network. The initiators

and targets can be configured to automatically register themselves with the

iSNS server.

iSCSI Name : A unique

worldwide iSCSI identifier, known as an iSCSI name, is used to identify the

initiators and targets within an iSCSI network to facilitate communication.

There

are two types of iSCSI names commonly used :

iSCSI Qualified Name (IQN): An organization must own a registered domain name to generate iSCSI Qualified Names. A date is included in the name to avoid potential conflicts caused by the transfer of domain names. An example of an IQN is iqn.2008-02.com.example:optional_string.

Extended Unique Identifier (EUI): An EUI is a globally unique identifier based on the IEEE EUI-64 naming standard. An EUI is composed of the eui prefix followed by a 16-character hexadecimal name, such as eui.0300732A32598D26.
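The two name formats can be told apart with simple pattern checks. The sketch below is a simplified illustration built only from the two examples above; the regular expressions and the function name `classify_iscsi_name` are assumptions and do not cover every rule of the iSCSI naming standard.

```python
import re

# Simplified patterns derived from the examples in the notes (assumptions,
# not a complete implementation of the iSCSI naming rules).
IQN_PATTERN = re.compile(r"iqn\.\d{4}-\d{2}\.[a-z0-9][a-z0-9.\-]*(:.+)?")
EUI_PATTERN = re.compile(r"eui\.[0-9A-Fa-f]{16}")


def classify_iscsi_name(name):
    """Return 'IQN', 'EUI', or None if the name matches neither pattern."""
    if IQN_PATTERN.fullmatch(name):
        return "IQN"
    if EUI_PATTERN.fullmatch(name):
        return "EUI"
    return None
```

Both sample names from the text are accepted, while a name with a missing month in the date field is rejected.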

IP SAN Protocol: FCIP: FCIP is a tunneling protocol that enables distributed FC SAN islands to be interconnected over existing IP-based networks. In FCIP, FC frames are encapsulated into the IP payload. An FCIP implementation is capable of merging interconnected fabrics into a single fabric.

The majority of FCIP implementations today use switch-specific features such as IVR (Inter-VSAN Routing) or FCRS (Fibre Channel Routing Services) to create a tunnel. In this manner, traffic may be routed between specific nodes without actually merging the fabrics.

FCIP

is extensively used in disaster recovery implementations in which data

is duplicated to the storage located at a remote site.

In

an FCIP environment, an FCIP gateway

is connected to each fabric via a standard FC connection. The fabric treats

these gateways as layer 2 fabric switches. An IP address is assigned to the

port on the gateway, which is connected to an IP network.

FCIP Protocol Stack:

Fibre Channel over

Ethernet ( FCoE ) : Data centers typically have multiple networks

to handle various types of I/O traffic—for example, an Ethernet network for TCP/IP communication and an FC network for FC communication.

The

need for two different kinds of physical network infrastructure increases the

overall cost and complexity of data center operation.

The Fibre Channel over Ethernet (FCoE) protocol provides consolidation of LAN and SAN traffic over a single physical interface infrastructure. FCoE uses the Converged Enhanced Ethernet (CEE) link (10 Gigabit Ethernet) to send FC frames over Ethernet.

Components of an FCoE Network:

CNA : A Converged Network Adapter (CNA) replaces both HBAs and NICs in the server and consolidates both the IP and FC traffic.

CNA

offloads the FCoE protocol processing task from the server, thereby freeing the

server CPU resources for application processing. A CNA contains separate

modules for 10 Gigabit Ethernet, Fibre Channel, and FCoE Application Specific

Integrated Circuits (ASICs).

Cable : Currently two options are available for FCoE cabling: copper-based Twinax and standard fiber optic cables. A Twinax cable is composed of two pairs of copper cables covered with a shielded casing. The Twinax cable can transmit data at the speed of 10 Gbps over short distances of up to 10 meters. Twinax cables require less power and are less expensive than fiber optic cables.

The

Small Form Factor Pluggable Plus (SFP+)

connector is the primary connector used for FCoE links and can be used with

both optical and copper cables.

FCoE Switch: An FCoE

switch has both Ethernet switch and Fibre Channel switch functionalities. The

FCoE switch has a Fibre Channel Forwarder (FCF),

Ethernet Bridge, and set of Ethernet ports and optional FC ports.

FCoE Frame mapping: The FC stack

consists of five layers: FC-0 through FC-4. Ethernet is typically considered as a set of protocols that operates at the

physical and data link layers in the seven-layer OSI stack. The FCoE protocol

specification replaces the FC-0 and FC-1 layers of the FC stack with Ethernet.

This provides the capability to carry the FC-2 to the FC-4 layer over the

Ethernet layer.

To

maintain good performance, FCoE must use jumbo frames to prevent a Fibre

Channel frame from being split into two Ethernet frames.
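A quick calculation shows why standard Ethernet frames are too small. The sketch below assumes the commonly cited FC frame layout (24-byte header, up to 2112 bytes of payload, 4-byte CRC) and a 1500-byte standard Ethernet payload. It ignores the extra FCoE encapsulation bytes, and the 2500-byte payload is just a hypothetical jumbo setting used for comparison.

```python
import math

# Commonly cited FC frame field sizes in bytes (assumed here, not taken
# from the notes above).
FC_HEADER = 24
FC_MAX_PAYLOAD = 2112
FC_CRC = 4
MAX_FC_FRAME = FC_HEADER + FC_MAX_PAYLOAD + FC_CRC

STANDARD_ETHERNET_PAYLOAD = 1500   # default Ethernet payload size
HYPOTHETICAL_JUMBO_PAYLOAD = 2500  # illustrative jumbo setting


def ethernet_frames_needed(fc_frame_bytes, ethernet_payload_bytes):
    """Number of Ethernet frames needed to carry one FC frame."""
    return math.ceil(fc_frame_bytes / ethernet_payload_bytes)
```

With the standard payload a full-size FC frame would have to be split across two Ethernet frames, whereas the larger payload carries it in one.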

Converged Enhanced

Ethernet

: Converged Enhanced Ethernet (CEE) or lossless Ethernet provides a new

specification to the existing Ethernet standard that eliminates the lossy

nature of Ethernet.

Lossless Ethernet requires the following functionalities:

•Priority-based flow control (PFC): Creates eight virtual links on a single physical link and uses the PAUSE capability of Ethernet on each virtual link to provide lossless transmission.

•Enhanced transmission selection (ETS) : Allocates bandwidth to

different traffic classes, such as LAN, SAN, and Inter Process Communication

(IPC). When a particular class of traffic does not use its allocated bandwidth,

ETS enables other traffic classes to use the available bandwidth.

•Congestion notification (CN)

: Congestion

notification provides end-to-end congestion management for protocols, such as

FCoE.

•Data

center bridging exchange protocol (DCBX)

Module 7 - Network-Attached Storage (NAS).

File Sharing Environment : File sharing enables users to share files with other users. A user who creates the file (the creator or owner of a file) determines the type of access (such as read, write, execute, append, delete) to be given to other users. When multiple users try to access a shared file at the same time, a locking scheme is required to maintain data integrity and, at the same time, make this sharing possible.

Some examples of file-sharing methods are File Transfer Protocol (FTP), Distributed File System (DFS), client-server models that use file-sharing protocols such as Network File System (NFS) and Common Internet File System (CIFS), and the peer-to-peer (P2P) model.

NAS

is a dedicated, high-performance file sharing and storage device. NAS enables

its clients to share files over an IP network. NAS uses network and

file-sharing protocols to provide access to the file data. These protocols

include TCP/IP for data transfer, and Common Internet File System (CIFS) and

Network File System (NFS) for network file service. NAS enables both UNIX and

Microsoft Windows users to share the same data seamlessly.

A

NAS device uses its own operating

system and integrated hardware and software components to meet specific

file-service needs. Its operating system is optimized for file I/O and,

therefore, performs file I/O better than a general-purpose server

Components of NAS : A NAS device

has two key components: NAS head and

storage. In some NAS

implementations, the storage could be external to the NAS device and shared

with other hosts.

Common Internet File

System (CIFS) : is a client-server application protocol that enables

client programs to make requests for files and services on remote computers

over TCP/IP. It is a public, or open, variation of Server Message Block (SMB) protocol.

It uses file and record locking to prevent users from overwriting the work of another user on a file or a record. It is a stateful protocol: the CIFS server maintains connection information regarding every connected client.

Users

refer to remote file systems with an easy-to-use file-naming scheme:

\\server\share

or \\servername.domain.suffix\share.

Network File System

(NFS)

is a client-server protocol for file sharing that is commonly used on UNIX

systems. It uses Remote Procedure Call (RPC) as a method of inter-process

communication between two computers.

NFS (NFSv3 and NFSv2) is a stateless protocol and uses UDP as the transport layer protocol (NFSv3 can also use TCP). NFS version 4 (NFSv4) uses TCP and is based on a stateful protocol design. It offers enhanced security.

NAS I/O Operation: The NAS operating system keeps track of the

location of files on the disk volume and converts client file I/O into

block-level I/O to retrieve data and converts back to file I/O for client.

NAS Implementation : Common NAS implementations are unified, gateway, and scale-out.

Unified NAS : The unified NAS consolidates NAS-based (file-level) and SAN-based (block-level) data access within a unified storage platform. It supports both CIFS and NFS protocols for file access and iSCSI and FC protocols for block-level access. A unified NAS contains one or more NAS heads and storage in a single system. The storage may consist of different drive types, such as SAS, ATA, FC, and flash drives, to meet different workload requirements.

Gateway NAS : device

consists of one or more NAS heads and uses external and independently

managed storage. Management functions in this type of solution are more complex

than those in a unified NAS environment because there are separate

administrative tasks for the NAS head and the storage. A gateway solution can

use the FC infrastructure, such as switches and directors for accessing

SAN-attached storage arrays or direct-attached storage arrays.

The gateway NAS is more scalable compared to unified NAS because NAS heads and storage arrays can be independently scaled up when required. Connectivity between the NAS gateway and the storage system in a gateway solution is achieved through a traditional FC SAN.

Scale-out NAS

implementation: Pools multiple

nodes together in a cluster. A node may consist of either the NAS head or

storage or both. The cluster performs the NAS operation as a single entity. A

scale-out NAS provides the capability to scale its resources by simply adding

nodes to a clustered NAS architecture. The cluster works as a single NAS device

and is managed centrally.

Scale-out

NAS creates a single file system that runs on all nodes in the cluster. All

information is shared among nodes, so the entire file system is

accessible by clients connecting to any node in the cluster. Scale-out NAS stripes

data across all nodes in a cluster along with mirror or parity

protection. Scale-out NAS clusters use separate internal and external networks for back-end and front-end connectivity, respectively. An internal network, which uses high-speed networking technology such as InfiniBand or Gigabit Ethernet, provides connections for intracluster communication, and an external network connection enables clients to access and share file data.

File-level

Virtualization : File-level virtualization eliminates the dependencies

between the data accessed at the file level and the location where the files

are physically stored. It creates a logical pool of storage, enabling users to

use a logical path, rather than a physical path, to access files. A global

namespace is used to map the logical path of a file to the physical path names.

File-level virtualization enables the movement of files across NAS devices,

even if the files are being accessed.
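The role of the global namespace can be illustrated with a small lookup table. This is a toy sketch: the class name `GlobalNamespace`, its methods, and the example paths are hypothetical and do not describe any particular product.

```python
class GlobalNamespace:
    """Toy global namespace: maps logical file paths to physical locations."""

    def __init__(self):
        self._map = {}

    def publish(self, logical_path, physical_path):
        """Register a file under a logical path."""
        self._map[logical_path] = physical_path

    def resolve(self, logical_path):
        """Return the current physical location for a logical path."""
        return self._map[logical_path]

    def migrate(self, logical_path, new_physical_path):
        """Move a file to another NAS device; the logical path is unchanged."""
        if logical_path not in self._map:
            raise KeyError(logical_path)
        self._map[logical_path] = new_physical_path


# Example with invented paths: clients keep using the same logical path
# before and after the file moves between NAS devices.
ns = GlobalNamespace()
ns.publish("/corp/reports/q1.doc", "nas1:/vol3/reports/q1.doc")
ns.migrate("/corp/reports/q1.doc", "nas2:/vol7/reports/q1.doc")
```

Because clients only ever hold the logical path, the move in the example is invisible to them.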

Concepts in Practice:

EMC Isilon : Scale-out

NAS solution. Isilon has a specialized operating system called OneFS that enables the scale-out NAS

architecture.

EMC VNX Gateway:

Gateway NAS Solution: The VNX Series Gateway contains one or more

NAS heads, called X-Blades, that access external storage arrays, such as

Symmetrix and block-based VNX via SAN. The VNX Gateway supports both pNFS and

EMC patented Multi-Path File System (MPFS) protocols.

Module 8 - Object-based and the Unified Storage

Object-based Storage: More than 90 percent of the data generated is unstructured. Traditional solutions such as NAS handle this growth inefficiently, because NAS incurs high overhead in managing a large number of permissions and nested directories.

NAS

also manages large amounts of metadata generated by hosts, storage

systems, and individual applications. Typically this metadata is stored as part

of the file and distributed throughout the environment. This adds to the

complexity and latency in searching and retrieving files.

These changes demanded a smarter approach. Object-based storage is a way to store file data in the form of objects in a flat address space, based on its content and other attributes rather than its name and location.

OSD

uses flat address space to store data. Therefore, there is no hierarchy of

directories and files; as a result, a large number of objects can be stored in

an OSD system.

Each

object stored in the system is identified by a unique ID called the object ID. The object ID is

generated using specialized algorithms such as hash function on the data

and guarantees that every object is uniquely identified.
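A minimal sketch of content-derived object IDs in a flat address space follows. It assumes SHA-256 as the hash function and an in-memory dictionary as the store. Both are illustrative choices, and the class name `FlatObjectStore` is hypothetical, since the notes do not say which algorithm a real OSD uses.

```python
import hashlib


class FlatObjectStore:
    """Toy object store: one flat dictionary keyed by object ID."""

    def __init__(self):
        self._objects = {}  # object ID -> (data, metadata); no directories

    def put(self, data, metadata=None):
        """Store data and return its object ID, derived from the content."""
        object_id = hashlib.sha256(data).hexdigest()
        self._objects[object_id] = (data, dict(metadata or {}))
        return object_id

    def get(self, object_id):
        """Retrieve the user data for an object ID."""
        return self._objects[object_id][0]
```

Identical content always yields the same ID here, which is also the idea behind content addressing. Uniqueness in practice depends on the collision resistance of the chosen hash.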

Components of Object-based

Storage device: The OSD system is typically composed of three key

components: nodes, private network, and storage.

The

OSD system is composed of one or more nodes. A node is a server that

runs the OSD operating environment and

provides services to store, retrieve, and manage data in the system. The OSD

node divides the file into two parts: user data and metadata. The OSD node has

two key services: metadata service and storage service.

The

metadata service is responsible for generating the object ID from the contents

(may also include other attributes of data) of a file. It also maintains the

mapping of the object IDs and the file system namespace.

The

storage service manages a set of disks on which the user data is stored. OSD

typically uses low-cost and high-density disk drives to store the objects.

Traditional

storage solutions, such as SAN, and NAS, do not offer all these benefits as a

single solution. Object-based storage combines benefits of both the worlds. It

provides platform and location independence, and at the same time, provides

scalability, security and data-sharing capabilities.

These

capabilities make OSD a strong option for cloud-based

storage. Cloud service providers can leverage OSD to offer

storage-as-a-service. OSD supports web service access via representational

state transfer (REST) and simple

object access protocol (SOAP).

REST and SOAP APIs can be easily integrated with business applications that

access OSD over the web.

Content Addressed Storage

(CAS): A

data archival solution is a

promising use case for OSD.

Content

Addressed storage (CAS) is a special type of object-based storage device

purposely built for storing fixed

content.

The

stored object is assigned a globally unique address, known as a content address (CA). This

address is derived from the object’s binary

representation. Data access in CAS differs from other OSD devices. In CAS,

the application server can access the CAS device only via the CAS API running on the application

server.

Unified Storage : Deploying disparate storage solutions (NAS, SAN, and OSD) adds management complexity, cost, and environmental overheads. An ideal solution would be to have an integrated storage solution that supports block, file, and object access.

Unified storage supports multiple protocols for data access and can be managed using a single management interface.

Components

of Unified Storage: A unified storage system consists of following key

components: storage controller, NAS head, OSD node, and storage.

The

storage controller provides block-level access to application

servers through iSCSI, FC, or FCoE protocols. It contains iSCSI, FC, and FCoE front-end

ports for direct block access.

In a unified storage system, block, file, and object requests to the storage travel through different I/O paths: block I/O requests (FC, iSCSI, or FCoE), file I/O requests (NFS or CIFS), and object I/O requests (REST or SOAP).

Concepts in Practice : EMC

Atmos, EMC VNX, and EMC Centera.

EMC Atmos : object-based storage for unstructured

data. Atmos can be deployed in two ways: as a purpose-built hardware appliance

or as software in VMware environments.

EMC Centera : EMC Centera

is a simple, affordable, and secure repository for information archiving. EMC

Centera is designed and optimized specifically to deal with the storage and

retrieval of fixed content by meeting performance, compliance, and regulatory

requirements.

EMC VNX : EMC VNX is

a unified storage platform that

consolidates block, file, and object access in one solution. It implements a

modular architecture that integrates hardware components for block, file, and

object access. EMC VNX delivers file access (NAS) functionality via X-Blades

(Data Movers) and block access functionality via storage processors. Optionally

it offers object access to the storage using EMC Atmos Virtual Edition (Atmos

VE).

Module 9- Introduction to Business Continuity

Information

is an organization’s most important asset. Cost of unavailability of

information to an organization is greater than ever.

Business Continuity: A process that prepares for, responds to, and recovers from a system outage that can adversely affect business operations. In a virtualized environment, BC solutions need to protect both physical and virtualized resources.

It

involves proactive measures, such as business impact analysis, risk

assessments, BC technology solutions deployment (backup and replication), and

reactive measures, such as disaster recovery and restart, to be invoked in the

event of a failure.

Information

Availability: It is the ability of an IT infrastructure to function

according to business expectations, during its specific time of operation.

Information availability can be defined in terms of:

· Accessibility: Information should be accessible to the right user when required.

· Reliability: Information should be reliable and correct in all aspects.

· Timeliness: Defines the time window during which information must be accessible.

Causes of Information Unavailability: Various planned and unplanned incidents result in information unavailability.

· Unplanned outages (20%) – failures such as database corruption, component failure, and human error

· Planned outages (80%) – competing workloads such as backup and reporting, plus installation and maintenance of new hardware, software upgrades or patches, and refresh/migration

· Disaster (<1% of occurrences) – natural or man-made, such as flood, fire, or earthquake

Impact of Downtime:

Information unavailability or downtime results in loss of productivity, loss of

revenue, poor financial performance, and damages to reputation.

The business impact of downtime is the sum of all losses sustained as a result of a given disruption. An important metric, the average cost of downtime per hour, provides a key estimate.

Average cost of downtime per hour = average productivity loss per hour + average revenue loss per hour

Where:

Average productivity loss per hour = (total salaries and benefits of all employees per week) / (average number of working hours per week)

Average revenue loss per hour = (total revenue of an organization per week) / (average number of hours per week that an organization is open for business)
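As a worked example, the formulas above translate directly into a short function. The function name and the sample figures are invented for illustration.

```python
def average_cost_of_downtime_per_hour(weekly_salaries_and_benefits,
                                      weekly_working_hours,
                                      weekly_revenue,
                                      weekly_open_hours):
    """Average productivity loss per hour plus average revenue loss per hour."""
    productivity_loss = weekly_salaries_and_benefits / weekly_working_hours
    revenue_loss = weekly_revenue / weekly_open_hours
    return productivity_loss + revenue_loss


# Invented figures: 200,000 in weekly salaries over a 40-hour week, and
# 840,000 in weekly revenue for a business that is open 168 hours a week.
example_cost = average_cost_of_downtime_per_hour(200_000, 40, 840_000, 168)
```

In this example each term contributes 5,000 per hour, so an hour of downtime costs about 10,000 in the same currency units.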

Measuring Information

Availability : MTBF and MTTR

Mean Time Between Failure (MTBF): It is the average time available for a system or component to perform its normal operations between failures. MTBF is calculated as: Total uptime / Number of failures

Mean Time To Repair (MTTR): It is the average time required to repair a failed component. MTTR is calculated as: Total downtime / Number of failures

IA = MTBF / (MTBF + MTTR) or IA = uptime / (uptime + downtime)
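The three formulas can be checked against each other with a small calculation. The function names and the sample numbers below are assumptions chosen for illustration.

```python
def mtbf(total_uptime_hours, number_of_failures):
    """Mean Time Between Failure: total uptime / number of failures."""
    return total_uptime_hours / number_of_failures


def mttr(total_downtime_hours, number_of_failures):
    """Mean Time To Repair: total downtime / number of failures."""
    return total_downtime_hours / number_of_failures


def information_availability(total_uptime_hours, total_downtime_hours,
                             number_of_failures):
    """IA = MTBF / (MTBF + MTTR)."""
    between = mtbf(total_uptime_hours, number_of_failures)
    repair = mttr(total_downtime_hours, number_of_failures)
    return between / (between + repair)


# Invented sample: 990 hours up, 10 hours down, 5 failures.
sample_ia = information_availability(990, 10, 5)
```

For this sample MTBF is 198 hours, MTTR is 2 hours, and IA is 0.99, which matches uptime / (uptime + downtime) = 990 / 1000.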

Availability Measurement – Levels of ‘9s’ availability. For example, a service that is said to be “five 9s available” is available for 99.999 percent of the scheduled time in a year (24 × 365).
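To see what a given number of 9s means in practice, the allowed downtime per year can be computed from the 24 × 365 schedule. The function name is hypothetical, and the sketch assumes the service is scheduled around the clock.

```python
HOURS_PER_YEAR = 24 * 365  # scheduled time in a year, as in the text


def allowed_downtime_minutes_per_year(nines):
    """Downtime budget in minutes per year for an availability of N nines.

    For example, nines=5 means 99.999 percent availability.
    """
    unavailability = 10 ** (-nines)
    return HOURS_PER_YEAR * 60 * unavailability
```

Under these assumptions, five 9s leaves a little over five minutes of downtime per year, while three 9s leaves close to nine hours.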

BC terminologies and BC

planning :

Disaster

recovery: This

is the coordinated process of restoring systems, data, and the infrastructure

required to support ongoing business operations after a disaster occurs.

It is the process of restoring a previous copy of the data and applying logs or

other necessary processes to that copy to bring it to a known point of

consistency. Generally implies use of Backup technologies

Disaster

restart: This

is the process of restarting business operations with mirrored consistent

copies of data and applications. Generally implies use of replication

technologies.

Recovery-Point

Objective (RPO): This is the point-in-time to which systems and

data must be recovered after an outage. It defines the amount of data loss that

a business can endure.

Based on the RPO, organizations plan for the frequency with which a backup or replica must be made. Examples:

RPO of 24 hours: Backups are created at an offsite tape library every midnight. The corresponding recovery strategy is to restore data from the set of last backup tapes.

RPO of 1 hour: Shipping database logs to the remote site every hour. The corresponding recovery strategy is to recover the database to the point of the last log shipment.

RPO in the order of minutes: Mirroring data asynchronously to a remote site.

RPO of zero: Mirroring data synchronously to a remote site.

Recovery-Time Objective (RTO): The time within which systems and applications must be recovered after an outage. It defines the amount of downtime that a business can endure and survive. Some examples of RTOs:

RTO of 72 hours: Restore from tapes available at a cold site.

RTO of 12 hours: Restore from tapes available at a hot site.

RTO of a few hours: Use disk-based backup technology, which gives faster restore than a tape backup.

RTO of a few seconds: Cluster production servers with bidirectional mirroring, enabling the applications to run at both sites simultaneously.

BC Planning Lifecycles: The BC

planning lifecycle includes five stages:

1. Establishing objectives 2.

Analyzing 3. Designing and developing 4.

Implementing

5. Training, testing,

assessing, and maintaining

Business Impact

Analysis: A business impact analysis (BIA)

identifies which business units, operations, and processes are essential to the

survival of the business. It evaluates the financial, operational, and service

impacts of a disruption to essential business processes

Based

on the potential impacts associated with downtime, businesses can prioritize

and implement countermeasures to mitigate the likelihood of such disruptions.

BC Technology Solutions

:

Solutions that enable BC are

•Resolving single points of failure

•Multipathing software

•Backup and replication

Single points of failure : A single point of failure refers to the failure of a component that can terminate the availability of the entire system or IT service. To mitigate single points of failure, systems are designed with redundancy, such that the system fails only if all the components in the redundancy group fail.

For example, configuring NIC teaming at a server protects against the failure of a single physical NIC.

Multipathing software: Configuration

of multiple paths increases the data availability through path failover.

Multiple paths to data also improve I/O performance through load balancing

among the paths. Multipathing software intelligently recognizes and manages the

paths to a device by sending I/O down the optimal path based on the load

balancing and failover policy setting for the device. In a virtual environment,

multipathing is enabled either by using the hypervisor’s built-in capability or

by running a third-party software module, added to the hypervisor.
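The combination of load balancing and path failover can be sketched as follows. Round-robin is used here purely as an example policy. The class name `MultipathDevice` and the path labels are hypothetical, and real multipathing software supports other policies and detects path failures itself.

```python
class MultipathDevice:
    """Toy multipath selector: round-robin over paths, skipping failed ones."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.failed = set()
        self._next = 0  # index of the next path to try

    def mark_failed(self, path):
        self.failed.add(path)

    def mark_restored(self, path):
        self.failed.discard(path)

    def select_path(self):
        """Return the next live path, or raise if every path has failed."""
        for _ in range(len(self.paths)):
            path = self.paths[self._next]
            self._next = (self._next + 1) % len(self.paths)
            if path not in self.failed:
                return path
        raise RuntimeError("no live path to device")
```

While both paths are healthy, I/O alternates between them. Once one is marked failed, all I/O is sent down the surviving path until the failed one is restored.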

Concept in Practice : EMC PowerPath : EMC PowerPath is host-based multipathing software. Every I/O from the host to the array must pass through the PowerPath software.

Module 10 - Backup and Archive

A

backup is an additional copy of production data, created and retained

for the sole purpose of recovering lost or corrupted data and also for

regulatory requirements compliance.

Backups

are performed to serve three purposes: disaster recovery, operational recovery,

and archival.

Note:

Backup

window is the period during which a source is available for performing a data

backup

Backup Granularity : Backup

granularity depends on business needs and the required RTO/RPO.

Backups can be categorized as full, incremental, and cumulative (or differential).

Incremental backup copies the data that has changed since the last full or incremental backup, whichever has occurred more recently. This is much faster than a full backup but takes longer to restore.

Cumulative backup copies the data that has changed since the last full backup. This method takes longer than an incremental backup but is faster to restore.

Another way to implement full backup is synthetic (or constructed) backup. It is usually created from the most recent full backup and all the incremental backups performed after that full backup. This backup is called synthetic because the backup is not created directly from production data. It enables a full backup copy to be created offline without disrupting the I/O operation on the production volume.

Restore from incremental backup: Restoration from an incremental backup requires the last full backup and all the incremental backups available until the point of restoration. Incremental backups involve fewer files, take less time to back up, and need less storage, but take longer to restore.

Restore from cumulative backup: Restoration requires only the last full backup and the most recent cumulative backup. Cumulative backups involve more files, take more time to back up, and need more storage space, but recovery is faster because only those two copies must be applied.
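The difference in restore effort can be made concrete with a small sketch. It assumes a chronological list of backups tagged by type and returns the copies that must be applied to restore to the latest point; the labels and the function name are hypothetical.

```python
def restore_chain(backups):
    """Return the backup copies needed to restore to the latest backup.

    `backups` is a chronological list of (label, kind) tuples, where kind is
    "full", "incremental", or "cumulative". At least one full backup is assumed.
    """
    # Everything before the most recent full backup is irrelevant.
    last_full = max(i for i, (_, kind) in enumerate(backups) if kind == "full")
    chain = [backups[last_full][0]]
    later = backups[last_full + 1:]

    # A cumulative backup covers all changes since the full backup,
    # so only the most recent one is needed.
    cumulative_idx = [i for i, (_, kind) in enumerate(later) if kind == "cumulative"]
    if cumulative_idx:
        last_cum = cumulative_idx[-1]
        chain.append(later[last_cum][0])
        later = later[last_cum + 1:]

    # Every remaining incremental must be applied in order.
    chain += [label for label, kind in later if kind == "incremental"]
    return chain


incremental_week = [("Mon-full", "full"), ("Tue-inc", "incremental"),
                    ("Wed-inc", "incremental"), ("Thu-inc", "incremental")]
cumulative_week = [("Mon-full", "full"), ("Tue-cum", "cumulative"),
                   ("Wed-cum", "cumulative"), ("Thu-cum", "cumulative")]

inc_chain = restore_chain(incremental_week)
cum_chain = restore_chain(cumulative_week)
```

For the same week, the incremental strategy needs four copies to restore while the cumulative strategy needs two.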

Backup Architecture : A backup

system commonly uses the client-server architecture with a backup server and

multiple backup clients.

The backup server manages the

backup operations and maintains the backup catalog, which contains

information about the backup configuration and backup metadata.

The role of a backup client is to gather the data that is to be backed up and send it to the storage node.

The storage node (media server) is responsible for writing the data to the backup device. In a backup environment, a storage node is a host that controls backup devices. In many cases, the storage node is integrated with the backup server, and both are hosted on the same physical platform.

When

a backup operation is initiated, significant network communication takes place

between the different components of a backup infrastructure. The backup

operation is typically initiated by a server, but it can also be initiated by a

client.

After the data is backed up, it can be restored when required. A restore process must be manually initiated from the client.
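The interaction between the three components can be sketched as follows. This is a toy in-memory model written only to show who does what; the class and method names are hypothetical, and a real backup product adds scheduling, networking, and media handling that are omitted here.

```python
class StorageNode:
    """Host that controls the backup device and writes data to it."""

    def __init__(self):
        self.device = {}  # stands in for tape or disk: backup_id -> data

    def write(self, backup_id, data):
        self.device[backup_id] = data

    def read(self, backup_id):
        return self.device[backup_id]


class BackupClient:
    """Gathers the data to be backed up and sends it to the storage node."""

    def __init__(self, name, files):
        self.name = name
        self.files = files  # file path -> content

    def send_backup(self, storage_node, backup_id):
        storage_node.write(backup_id, dict(self.files))
        return sorted(self.files)  # metadata reported back for the catalog


class BackupServer:
    """Manages backup operations and maintains the backup catalog."""

    def __init__(self, storage_node):
        self.storage_node = storage_node
        self.catalog = []  # backup metadata, one entry per completed backup

    def run_backup(self, client):
        backup_id = len(self.catalog) + 1
        file_list = client.send_backup(self.storage_node, backup_id)
        self.catalog.append({"id": backup_id, "client": client.name,
                             "files": file_list})
        return backup_id

    def restore(self, client, backup_id):
        client.files = dict(self.storage_node.read(backup_id))


node = StorageNode()
server = BackupServer(node)
client = BackupClient("app01", {"/etc/app.conf": "port=80"})
backup_id = server.run_backup(client)   # server-initiated backup
client.files = {}                       # simulate data loss on the client
server.restore(client, backup_id)       # restore requested for the client
```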

Backup Methods: Hot backup and cold backup are the two methods deployed for backup. They are based on the state of the application when the backup is performed. In a hot (online) backup, the application is up and running with data being accessed; an open file agent can be used to back up open files. In a cold (offline) backup, the application is shut down during the backup.

In a disaster recovery environment, bare-metal recovery (BMR) refers to a backup in which OS, hardware, and application configurations are appropriately backed up for a full system recovery.

Some

BMR technologies—for example server configuration backup (SCB)—can recover a server even onto dissimilar hardware.

Server configuration

backup (SCB) : The process of system recovery involves reinstalling the

operating system, applications, and server settings and then recovering the

data. During a normal data backup operation, server configurations required for

the system restore are not backed up. Server configuration backup (SCB)

creates and backs up server configuration profiles based on user-defined

schedules.

In

a server configuration backup, the process of taking a snapshot of the

application server’s configuration (both system and application configurations)

is known as profiling.

Two types of profiles are used:

Base profile: contains the key elements of the operating system required to recover the server.

Extended profile: typically larger than the base profile and contains all the necessary information to rebuild the application environment.

Backup topologies: direct-attached, LAN-based, SAN-based, and mixed backup.

<not

complete ...>

Module 13- Cloud Computing

Cloud Computing: A model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.

According to NIST, the cloud infrastructure should have five essential characteristics:

· On-Demand Self-Service: Consumers can provision computing capabilities themselves, for example by viewing a service catalogue via a web interface and requesting a service.

· Broad Network Access: Capabilities are available over the network and accessible from thin or thick clients such as mobile phones, laptops, and tablets.

· Resource Pooling: The provider's resources are pooled to serve multiple customers using a multitenant model. Virtualization enables resource pooling and multitenancy in the cloud.

· Rapid Elasticity: Capabilities can be elastically provisioned and released, in some cases automatically, to scale rapidly outward and inward commensurate with demand.

· Measured Service: Resource usage can be monitored, controlled, and reported, providing transparency for both the provider and consumer of the utilized service.
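Rapid elasticity and measured service can be illustrated together with a short sketch. It assumes each instance can serve a fixed number of requests per second and that usage is metered in instance-hours; the demand figures, limits, and function name are hypothetical.

```python
import math


def desired_instances(demand, capacity_per_instance, min_instances=1, max_instances=10):
    """Return how many instances are needed to serve `demand` requests/second."""
    needed = math.ceil(demand / capacity_per_instance)
    # Clamp to the allowed range so the pool never scales to zero or without bound.
    return max(min_instances, min(max_instances, needed))


hourly_demand = [120, 480, 950, 300, 40]   # hypothetical requests/second, one value per hour
plan = [desired_instances(d, capacity_per_instance=100) for d in hourly_demand]

# Measured service: usage is metered so it can be reported to provider and consumer.
instance_hours = sum(plan)
```

The instance count scales outward as demand rises and inward as it falls, and the metered total is what a provider would report or bill.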

Cloud Enabling Technologies: Grid Computing, Utility Computing, Virtualization, and Service Oriented Architecture (SOA).

Cloud Service and Deployment Models: There are three primary cloud service models: Software-as-a-Service (SaaS), Platform-as-a-Service (PaaS), and Infrastructure-as-a-Service (IaaS). There are four cloud deployment models: Public, Private, Community, and Hybrid.

Cloud Service Models:

· Infrastructure-as-a-Service (IaaS): The capability provided to the consumer is to provision processing, storage, networks, and other fundamental computing resources where the consumer is able to deploy and run arbitrary software, which can include operating systems and applications. Amazon Elastic Compute Cloud (Amazon EC2) is an example of IaaS.

· Platform-as-a-Service (PaaS): The capability provided to the consumer is to deploy onto the cloud infrastructure consumer-created or acquired applications created using programming languages, libraries, services, and tools supported by the provider. Google App Engine and Microsoft Windows Azure Platform are examples of PaaS.

· Software-as-a-Service (SaaS): The capability provided to the consumer is to use the provider’s applications running on a cloud infrastructure. For example, Salesforce.com is a provider of SaaS-based CRM applications.

Deployment Models: Cloud computing is classified into four deployment models: public, private, community, and hybrid.

· Public: In a public cloud model, the cloud infrastructure is provisioned for open use by the general public. It may be owned, managed, and operated by a business, academic, or government organization, or some combination of them. It exists on the premises of the cloud provider.

· Private: In a private cloud model, the cloud infrastructure is provisioned for exclusive use by a single organization. It may be owned, managed, and operated by the organization (on-premise), a third party (externally hosted private cloud), or some combination of them, and it may exist on or off premises.

· Community: In a community cloud model, the cloud infrastructure is provisioned for exclusive use by a specific community of consumers from organizations that have shared concerns. It may be owned, managed, and operated by one or more of the organizations in the community, a third party, or some combination of them, and it may exist on or off premises.

· Hybrid: In a hybrid cloud model, the cloud infrastructure is a composition of two or more distinct cloud infrastructures (private, community, or public) that remain unique entities, but are bound together by standardized or proprietary technology that enables data and application portability (for example, cloud bursting for load balancing between clouds).
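Cloud bursting in a hybrid model can be sketched as a placement rule: workloads run in the private cloud while it has capacity, and overflow goes to a public cloud. The capacity units, request sizes, and function name below are hypothetical and chosen only to show the idea.

```python
def place_workloads(requests, private_capacity):
    """Assign each request (in units of capacity) to the private or public cloud."""
    placement = []
    used = 0
    for units in requests:
        if used + units <= private_capacity:
            used += units
            placement.append("private")
        else:
            placement.append("public")   # burst: the private cloud cannot fit this request
    return placement


placement = place_workloads([4, 3, 2, 5, 1], private_capacity=10)
```

In this run the fourth request does not fit in the remaining private capacity and bursts to the public cloud, while the smaller fifth request still fits privately.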

Cloud computing infrastructure, challenges of cloud computing: Cloud computing infrastructure usually consists of the following layers:

· Physical infrastructure: The physical infrastructure consists of physical computing resources, which include physical servers, storage systems, and networks.

· Virtual infrastructure: Virtualization enables fulfilling some of the cloud characteristics, such as resource pooling and rapid elasticity.

· Applications and platform software: SaaS, PaaS

Cloud management and service creation tools: The cloud management and service creation tools layer includes three types of software:

· Physical and virtual infrastructure management software

· Unified management software: The key function of the unified management software is to automate the creation of cloud services.

· User-access management software

Cloud Optimized Storage: Cloud-optimized storage typically leverages object-based storage technology. Key characteristics of a cloud-optimized storage solution are:

• Massively scalable infrastructure that supports a large number of objects across a globally distributed infrastructure

• Unified namespace that eliminates capacity, location, and other file system limitations

•